How mim OE & MCP Enable Scalable Context-Aware Systems

The era of stateless, cloud-bound AI is over. We’re entering a new paradigm: Agentic-Native systems that run on endpoint devices, where the software doesn’t just wait for input but actively reasons, interacts, and acts.

To move from prototypes to production, developers need to equip AI agents running on end devices with:

- Persistent memory across interactions

- Access to real-time systems and structured data

- The ability to invoke tools, not just run inference

- Resilience across a fragmented, heterogeneous compute landscape

- And most critically: shared context and peer collaboration

This calls for two foundational layers:

- A runtime environment for all devices that brings execution to where context lives

- A protocol that standardizes how agents interact with external systems and with each other

mimik Operating and Execution Environment (mim OE) is the execution foundation, and the Model Context Protocol (MCP) adds optional, structured interoperability at the content/conversation level.

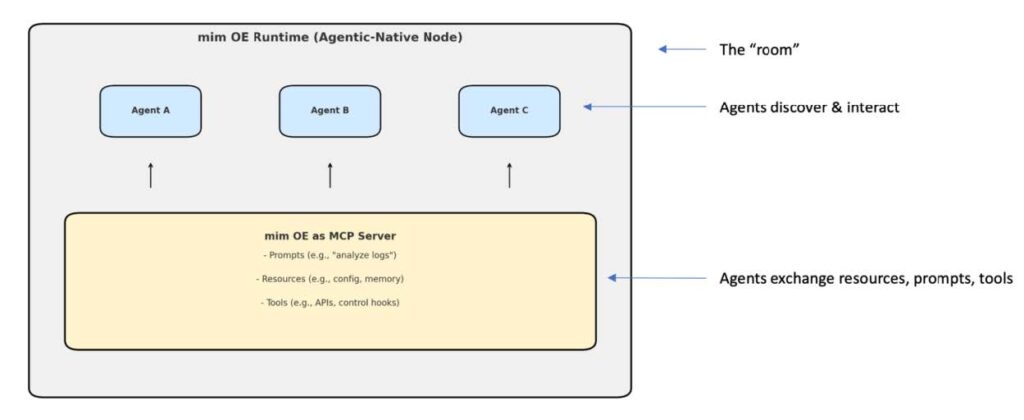

The Room and the Conversation: A Mental Model

To better understand how these components work together, imagine a meeting room:

- mim OE is the room itself: it provides the air, light, space, and seating that allow participants (agents) to be present, visible, and reachable. It ensures that agents know who is there, what’s nearby, and how to reach them. It gives them situational context.

- MCP is the conversation that happens in the room: it defines the structured language that agents use to interact. Like a shared vocabulary and grammar, MCP tells agents how to ask questions, understand available functions, request tools, and share information. It provides agent context at the conversation/content level and enables meaningful collaboration.

Together, mim OE ensures agents are aware and operational, and MCP enables structured conversations when needed.

Visual Overview: mim OE + MCP Architecture

mim OE provides the secure, local environment where agents are deployed, discover one another, and operate at or near the source of data, both locally and across distributed systems.

MCP defines how those agents can structure their interaction, dynamically discover each other’s capabilities and understand how to invoke or utilize them.

Defining Context and Enabling Agent-to-Agent Communication

MCP, introduced by Anthropic and supported by OpenAI, acts like a shared language layer. While APIs expose functions, MCP adds semantic clarity. MCP is a client-server protocol that allows AI agents (clients) to access and exchange structured context with external systems (servers). It defines a normalized interface for:

- Resources: Structured data (e.g., documents, profiles, sensor logs)

- Prompts: Instruction templates that shape agent behaviour

- Tools: APIs or functions that agents can invoke to act on their environment
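As a rough illustration of that normalized interface, the sketch below builds the kind of JSON-RPC 2.0 requests an MCP client sends for each of the three primitives. The method names (`resources/read`, `prompts/get`, `tools/call`) follow the MCP specification; the URI, prompt name, tool name, and arguments are hypothetical placeholders, and the `mcp_request` helper is ours, not part of any SDK.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC requests carry a unique id per request


def mcp_request(method, params=None):
    """Build a JSON-RPC 2.0 request of the kind MCP clients send."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Hypothetical URI, prompt name, and tool name, for illustration only.
read_resource = mcp_request("resources/read", {"uri": "file:///logs/sensor.json"})
get_prompt = mcp_request(
    "prompts/get", {"name": "summarize_log", "arguments": {"tone": "brief"}}
)
call_tool = mcp_request(
    "tools/call", {"name": "turn_on_light", "arguments": {"room": "kitchen"}}
)

for message in (read_resource, get_prompt, call_tool):
    print(json.dumps(message))
```

All three primitives share one envelope, so an agent that can speak to one MCP server can speak to any other without server-specific client code.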

But here’s what makes MCP especially useful: It enables agent-to-agent communication at the conversation level.

Using MCP, agents can engage in structured dialogue, trigger shared workflows, and collaborate on decision-making, even across different nodes.

In the room metaphor, mim OE ensures agents are present, visible, and discoverable, like knowing who’s in the room and being able to walk over and talk to them. MCP is an option that adds the layer of structured conversation: it lets agents understand what others in the room can do, how to ask for it, and how to collaborate using shared meaning. Without MCP, agents must already know how to speak each other’s language. With MCP, they can discover functions and coordinate workflows even if they’ve never met before. It transforms presence into purposeful dialogue.

And because mim OE can serve as an MCP server as well, it allows local agents to expose MCP-compatible endpoints, making every room distributed and conversational.
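To make "discover first, then call" concrete, here is a minimal in-memory sketch of an agent answering the two MCP tool methods, `tools/list` and `tools/call`, and a client that has no prior knowledge of the agent. It is an assumption-laden toy: there is no transport, the `initialize` handshake that real MCP sessions start with is skipped, and the `query_temperature` tool, its schema, and the fixed reading are hypothetical. It does not show how mim OE itself hosts an MCP server.

```python
# Hypothetical tool for one local agent; the name and schema are illustrative.
def query_temperature(arguments):
    return f"{arguments['room']}: 21.5 C"  # fixed reading stands in for a sensor


TOOLS = {
    "query_temperature": {
        "description": "Return the current temperature for a room.",
        "inputSchema": {
            "type": "object",
            "properties": {"room": {"type": "string"}},
            "required": ["room"],
        },
        "handler": query_temperature,
    }
}


def reply(request, result=None, error=None):
    """Wrap a result or an error in a JSON-RPC 2.0 response."""
    message = {"jsonrpc": "2.0", "id": request["id"]}
    if error is not None:
        message["error"] = error
    else:
        message["result"] = result
    return message


def handle(request):
    """Answer tools/list and tools/call; reject everything else."""
    method = request["method"]
    params = request.get("params", {})
    if method == "tools/list":
        tools = [
            {"name": name, "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]
        return reply(request, {"tools": tools})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return reply(request, error={"code": -32602, "message": "Unknown tool"})
        text = tool["handler"](params.get("arguments", {}))
        return reply(request, {"content": [{"type": "text", "text": text}],
                               "isError": False})
    return reply(request, error={"code": -32601, "message": "Method not found"})


# Client side: learn what exists, read the schema, then invoke by name.
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
tool = listing["result"]["tools"][0]
arguments = {key: "kitchen" for key in tool["inputSchema"]["required"]}
answer = handle({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": tool["name"], "arguments": arguments},
})
print(answer["result"]["content"][0]["text"])
```

The client never hard-codes the tool name or its parameters; both come from the `tools/list` response, which is what lets agents that have never met coordinate.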

While mim OE is where agents live, discover, and collaborate in context, MCP defines how they describe their capabilities and interact in a structured, portable, and semantically meaningful way.

mim OE is a lightweight, secure runtime that runs on phones, gateways, microcontrollers, drones, vehicles, and cloud VMs, turning them into intelligent, discoverable nodes.

It includes:

- A serverless execution engine

- A local service mesh for agent discovery and peer-to-peer coordination

- Built-in offline resilience and context persistence

- Optional support to act as an MCP server for local tools, prompts, and resources

With mim OE, agents gain situational awareness of their environment, including sensors, nearby agents, and local memory, and can function autonomously or as part of a larger distributed mesh.
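The discovery idea can be pictured with a toy registry. The sketch below is purely conceptual and is not the mim OE API: the `Node` and `LocalMesh` classes, the node ids, and the network names are all hypothetical, and a real mesh would discover peers over the network rather than from an in-process dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    network: str                      # the local network this node is attached to
    services: set = field(default_factory=set)


class LocalMesh:
    """Toy in-memory registry; NOT the mim OE discovery API."""

    def __init__(self):
        self._nodes = {}

    def advertise(self, node):
        """Register (or refresh) a node and the services it offers."""
        self._nodes[node.node_id] = node

    def discover(self, network, service):
        """Return sorted ids of nodes on `network` that offer `service`."""
        return sorted(
            node.node_id
            for node in self._nodes.values()
            if node.network == network and service in node.services
        )


mesh = LocalMesh()
mesh.advertise(Node("phone-1", "home-lan", {"voice-assistant"}))
mesh.advertise(Node("hub-1", "home-lan", {"lights", "thermostat"}))
mesh.advertise(Node("car-1", "garage-lan", {"lights"}))

print(mesh.discover("home-lan", "lights"))
```

Filtering by network here stands in for the broader idea that what an agent can see depends on where it is and what it is allowed to reach.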

Comparison: API Gateway vs MCP Server in mim OE

If mim OE includes both an API gateway and acts as an MCP server, the key difference in usage lies in who is talking to whom, how, and why. Here’s a breakdown:

| Feature | API Gateway | MCP Server |
| --- | --- | --- |
| Primary Role | Routes and exposes REST/gRPC APIs to external consumers | Provides structured, standardized access to contextual resources for AI agents |
| Audience | External apps, services, clients (traditional or AI agents) | AI agents (clients) that want to know the language and function of other agents in order to query tools, prompts, or structured resources |
| Interaction Style | Request-response (generic APIs) or streaming (active APIs) | Semantic-driven structured dialogue (agent-to-agent or agent-to-resource) |
| Use Case | Exposing microservices as APIs (e.g., /Locations, /Configs) | Enabling agents to discover functions and semantics in order to talk to tools, prompts, other agents (e.g., get_tool(tool_id)) |
| Protocol | REST, gRPC, etc. | Model Context Protocol (MCP) |
| Client Type | Any client apps, solutions, or agents at the level of resource exposure | AI agents at the semantic level |
| Security Context | API tokens, headers, ACLs | Agent context, identity scopes, structured context boundaries |

Example

Imagine you’re running a smart home hub using mim OE:

- The API Gateway exposes /light/on or /temperature/current to apps or humans.

- The MCP Server exposes tools like turn_on_light() or query_temperature() that other agents (e.g., voice assistant, energy optimizer) can use in structured workflows.
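One way to picture the difference is to expose the same function through both interfaces. In the sketch below, everything is illustrative: the `POST` verb, the request body shape, the tool description, and the dispatch helpers are assumptions made for the example, not mim OE behavior.

```python
def turn_on_light(room: str) -> str:
    """The underlying capability both interfaces share."""
    return f"light on in {room}"


# API gateway view: a path mapped to a handler. The caller must already
# know the path and the body it expects (POST is an assumption here).
ROUTES = {("POST", "/light/on"): lambda body: turn_on_light(body["room"])}

# MCP view: the same capability plus a machine-readable description
# that an agent can discover at runtime.
TOOL = {
    "name": "turn_on_light",
    "description": "Turn on the light in the given room.",
    "inputSchema": {
        "type": "object",
        "properties": {"room": {"type": "string"}},
        "required": ["room"],
    },
}


def gateway_dispatch(method, path, body):
    """Route a request by verb and path, as a gateway would."""
    return ROUTES[(method, path)](body)


def mcp_call(name, arguments):
    """Invoke the tool by name after checking the advertised schema."""
    if name != TOOL["name"]:
        raise KeyError(name)
    missing = [k for k in TOOL["inputSchema"]["required"] if k not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return turn_on_light(**arguments)


print(gateway_dispatch("POST", "/light/on", {"room": "hall"}))
print(mcp_call("turn_on_light", {"room": "hall"}))
```

Both calls reach the same function. The gateway path suits clients that were written against a known API, while the tool description lets an agent work out what to send without prior integration.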

Analogy (Extending the Room Metaphor)

- API Gateway is like a reception desk in the room: humans or external systems walk in and make explicit requests like “turn on the light” or “get the weather.”

- MCP Server is like an internal team whiteboard + toolshed: agents inside the room use it to access shared resources, prompts, and tools, and to talk to each other with structure and context.

API Gateway and MCP: Complementary Interfaces and Roles

- API Gateway is essential for interoperability with both agent and non-agent systems: mobile apps, cloud services, dashboards, and other external clients. It exposes REST or gRPC endpoints that allow systems to invoke resources directly, regardless of whether they are agentic.

- MCP Server is essential for building AI agent systems where agents need to reason, discover, and collaborate autonomously.

They’re complementary: the API gateway makes mim OE useful to the outside world, while MCP makes it powerful for internal agent collaboration.

Example: Agent Collaboration in a Smart Vehicle

Imagine a smart vehicle running mim OE:

- A driver behavior agent monitors sensors and adapts behavior in real time

- A navigation assistant queries an MCP tool for optimized routing

- A fleet coordination agent uses MCP to communicate with nearby vehicles

All three agents are microservices running locally in mim OE. They are aware of each other’s presence (thanks to mim OE), and they speak via MCP, enabling dynamic coordination.

Why It Matters to Developers

Without mim OE, agents are isolated: they cannot discover where to execute, and they cannot collaborate or coordinate across nodes based on real-time context. There’s no shared environment that enables them to discover one another, communicate at the API level, and act across different systems depending on network, trust, authorization, or situational proximity.

Without MCP, agents may be present but lack a shared structure for understanding each other’s capabilities. Developers must define ahead of time which agents need to talk, what they offer, and how they operate, essentially preloading the “language” and “vocabulary” for interaction.

With mim OE + MCP, developers get:

- A clean architectural separation between runtime execution (mim OE) and interaction semantics (MCP)

- On-the-fly, context-aware collaboration across any node, local or remote, based on network visibility, access permissions, and relevance

- An open, structured way to enable agent-to-agent conversations without predefined knowledge

- Local-first deployment with low latency and privacy control that still works across clouds

- Cross-node collaboration without centralized dependencies

Getting Started

To build real-world Agentic-Native systems:

- Deploy mim OE on supported devices (Linux, Android, Windows, iOS, etc.)

- Wrap agents as microservices with internal APIs or MCP endpoints

- Enable mim OE as an MCP server to host local tools, prompts, and resources

- Use MCP clients in agents for structured, interoperable communication

- Build for offline-first collaboration, not cloud dependency
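For the offline-first step, one common pattern is an outbox that queues messages while a peer is unreachable and delivers them in order once it is back. The sketch below shows that pattern in isolation; the `Outbox` and `FakeLink` classes and the event names are hypothetical, and this is not a description of how mim OE implements its own offline resilience.

```python
from collections import deque


class Outbox:
    """Queue messages while a peer is unreachable; deliver in order later."""

    def __init__(self, send):
        self._send = send        # callable(message); raises ConnectionError when offline
        self._pending = deque()

    def publish(self, message):
        """Queue a message and try to deliver everything queued so far."""
        self._pending.append(message)
        return self.flush()

    def flush(self):
        """Send queued messages in order; stop at the first failure."""
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                return False     # still offline; keep the rest queued
            self._pending.popleft()
        return True

    def __len__(self):
        return len(self._pending)


class FakeLink:
    """Stand-in for a network link that can be toggled on and off."""

    def __init__(self):
        self.online = False
        self.delivered = []

    def send(self, message):
        if not self.online:
            raise ConnectionError("peer unreachable")
        self.delivered.append(message)


link = FakeLink()
outbox = Outbox(link.send)
outbox.publish({"event": "door_opened"})   # queued, link is offline
outbox.publish({"event": "light_on"})      # queued behind the first
link.online = True
outbox.flush()                             # both delivered, in order
print(link.delivered)
```

A message is removed from the queue only after `send` returns without raising, so a failure mid-flush never drops or reorders events.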

The Future Is Contextual and Conversational

Agentic-Native AI is not a monolithic cloud model. It’s distributed. It’s local. It’s peer-aware.

With mim OE providing the room, and MCP enabling the conversation, developers can now build intelligent systems that are:

- Scalable

- Modular

- Resilient

- Real-time

- And most importantly: collaborative

Agent-based AI isn’t a cloud-centric model. It’s a context-first, conversation-driven, and everywhere-enabled model.

With MCP powering agent conversations, and mim OE hosting them wherever context lives, developers have everything needed to build the next generation of intelligent systems: open, interoperable, and edge native.

This is how Agentic-Native systems are operationalized at scale.

- Check out our QuickStart Guides and Tutorials.

- Create your developer account

- Need technical help? Reach out to our support team.

- Need help or looking to partner? Get in touch with us at partners@mimik.ai.